SEO is an important factor in the success of your store, and a properly configured robots.txt goes a long way toward making the job of search engine crawlers easier.

What is robots.txt?

In a nutshell, robots.txt is a file that instructs search engine crawlers on what they can or can’t crawl. Without a robots.txt in your root directory, search engine crawlers coming across your store will crawl everything that they can, and this includes duplicated or unimportant pages that you don’t want the search engine crawlers to waste their crawl budget on. A robots.txt should be able to address this.

Note: The robots.txt file should not be used to hide your webpages from Google. You should use the noindex meta tag for this purpose instead.
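To make the difference concrete, here is a minimal sketch that checks whether a page's HTML carries a robots meta tag containing noindex. It relies only on Python's standard html.parser module. The names RobotsMetaParser and has_noindex, as well as the sample markup, are illustrative assumptions and not part of Magento.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots" ...> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute names arrive lowercased; values may be None.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() != "robots":
            return
        content = attr_map.get("content", "")
        self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def has_noindex(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical page markup, for illustration only.
sample_page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(sample_page))
```

Keep in mind that a crawler can only see this tag if it is allowed to fetch the page, so a page you want de-indexed this way should not also be disallowed in robots.txt.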

Default robots.txt instructions in Magento 2

By default, the robots.txt file generated by Magento contains only some basic instructions for web crawlers.

# Default instructions provided by Magento
User-agent: *
Disallow: /lib/
Disallow: /*.php$
Disallow: /pkginfo/
Disallow: /report/
Disallow: /var/
Disallow: /catalog/
Disallow: /customer/
Disallow: /sendfriend/
Disallow: /review/
Disallow: /*SID=

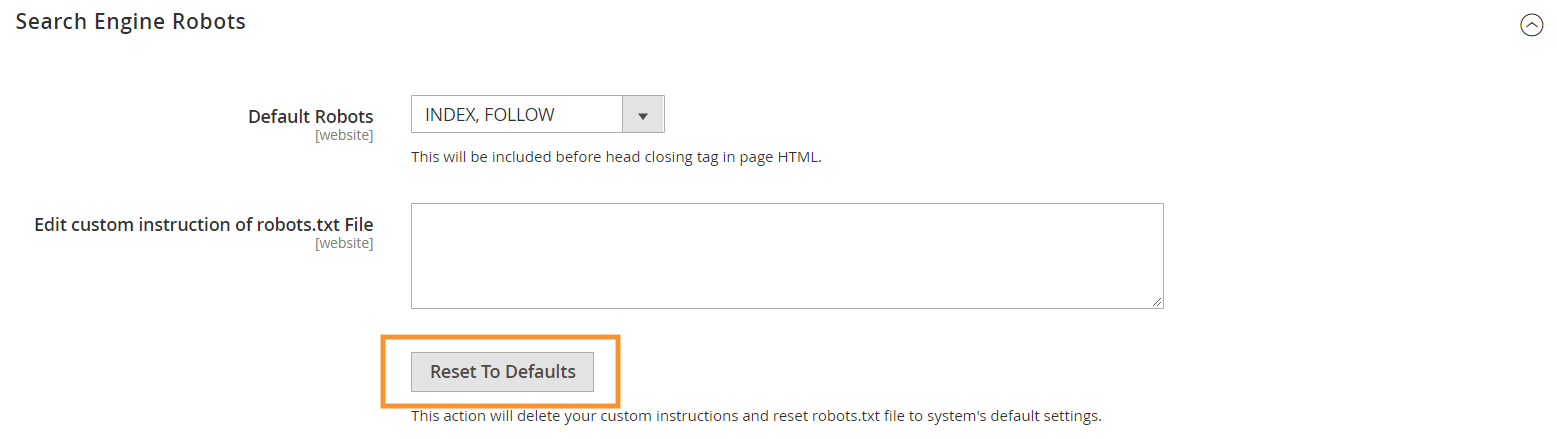

To generate these default instructions, click the Reset to Defaults button in the Search Engine Robots configuration in your Magento backend.

Why you need to make custom robots.txt instructions in Magento 2

While the default robots.txt instructions provided by Magento are necessary to tell crawlers to avoid crawling certain files that are used internally by the system, they’re not nearly enough for most Magento stores.

Search engine robots only have a finite amount of resources for crawling webpages. For a site with thousands or even millions of URLs to crawl (which is more common than you’d think), you’ll need to prioritize the type of content that needs to be crawled (with a sitemap.xml) and keep crawlers away from everything else (with a robots.txt). In practice, the latter means disallowing duplicated, irrelevant, and unnecessary pages in your robots.txt.

Basic format of robots.txt directives

Instructions in the robots.txt are laid out in a coherent manner, friendly to non-technical users:

# Rule 1
User-agent: Googlebot
Disallow: /nogooglebot/

# Rule 2
User-agent: *
Allow: /

Sitemap: https://www.example.com/sitemap.xml

User-agent: indicates the specific crawler that the rule is for. Some common user agents are Googlebot, Googlebot-Image, Mediapartners-Google, Googlebot-Video, etc. For an extensive list of common crawlers, see Overview of Google crawlers.

Allow & Disallow: specify paths that the designated crawler(s) can or cannot access. For example, Allow: / means that the crawler can access the entire site without restriction.

Sitemap: indicates the path to the sitemap for your store. A sitemap tells search engine crawlers what content to prioritize, while the rest of the content in robots.txt tells crawlers what content they can or cannot crawl.
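As a rough way to see how these directives play out, the sketch below feeds the two example rules to Python's standard urllib.robotparser and asks which URLs each crawler may fetch. The helper name build_parser and the test URLs are assumptions for illustration. This parser does plain prefix matching on paths, so it suits simple rules like these rather than wildcard patterns, and site_maps() requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# The two example rules, one directive per line.
RULES = """\
# Rule 1
User-agent: Googlebot
Disallow: /nogooglebot/

# Rule 2
User-agent: *
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(RULES)

# Googlebot is blocked from /nogooglebot/ but nothing else.
print(parser.can_fetch("Googlebot", "https://www.example.com/nogooglebot/page.html"))  # False
print(parser.can_fetch("Googlebot", "https://www.example.com/shoes.html"))  # True

# Any other crawler falls under the catch-all group, which allows everything.
print(parser.can_fetch("Bingbot", "https://www.example.com/nogooglebot/page.html"))  # True

# Sitemap lines are collected separately from the per-crawler rules.
print(parser.site_maps())
```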

Also in robots.txt, you can use several special characters:

*: When put in user-agent, the asterisk (*) refers to all search engine crawlers (except for AdsBot crawlers) that visit the site. When used in the Allow/Disallow directives, it means 0 or more instances of any valid character (e.g., Allow: /example*.css matches /example.css and also /example12345.css).

$: designates the end of a URL. For example, Disallow: /*.php$ will block all files that end with .php

#: designates the start of a comment, which crawlers will ignore.
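The matching behavior of * and $ described above can be approximated with a small regular-expression translation. This is only a sketch of one way to read these rules, not how any search engine actually implements them; the function names robots_pattern_to_regex and path_matches are made up for this example.

```python
import re


def robots_pattern_to_regex(pattern: str) -> "re.Pattern":
    """Translate a robots.txt path pattern into a compiled regex.

    '*' becomes 'any run of characters'; a trailing '$' anchors the end
    of the URL. Without '$', the pattern only has to match a prefix.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then rejoin the pieces with '.*'.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def path_matches(pattern: str, path: str) -> bool:
    """Return True if the URL path is covered by the pattern."""
    return robots_pattern_to_regex(pattern).match(path) is not None


# Examples taken from the explanations above.
print(path_matches("/example*.css", "/example.css"))       # True
print(path_matches("/example*.css", "/example12345.css"))  # True
print(path_matches("/*.php$", "/index.php"))                # True
print(path_matches("/*.php$", "/index.php?id=1"))           # False
```

A helper like this is handy for checking a new Disallow pattern against a handful of real store URLs before saving it in the admin.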

Note: Except for the Sitemap path, paths in robots.txt are always relative to the site root, which means you can’t use full URLs (e.g., https://simicart.com/nogooglebot/) to specify paths.
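If you are starting from full URLs copied out of a browser or a crawl report, a short helper can reduce them to the relative form that robots.txt expects. The sketch below uses Python's standard urllib.parse; the function name to_robots_path is a hypothetical choice for this example.

```python
from urllib.parse import urlsplit


def to_robots_path(url: str) -> str:
    """Strip scheme and host, keeping only the path and query string."""
    parts = urlsplit(url)
    path = parts.path or "/"
    return f"{path}?{parts.query}" if parts.query else path


print(to_robots_path("https://simicart.com/nogooglebot/"))  # /nogooglebot/
```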

Configuring robots.txt in Magento 2

To access the robots.txt file editor, in your Magento 2 admin:

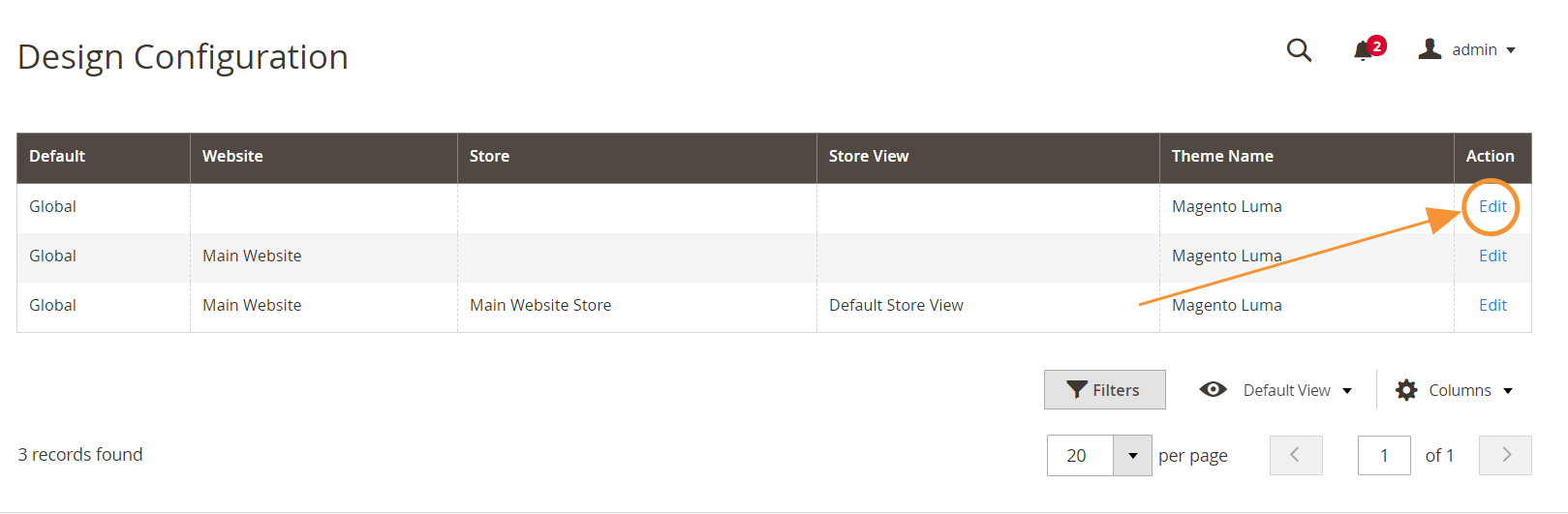

Step 1: Go to Content > Design > Configuration

Step 2: Edit the Global configuration in the first row

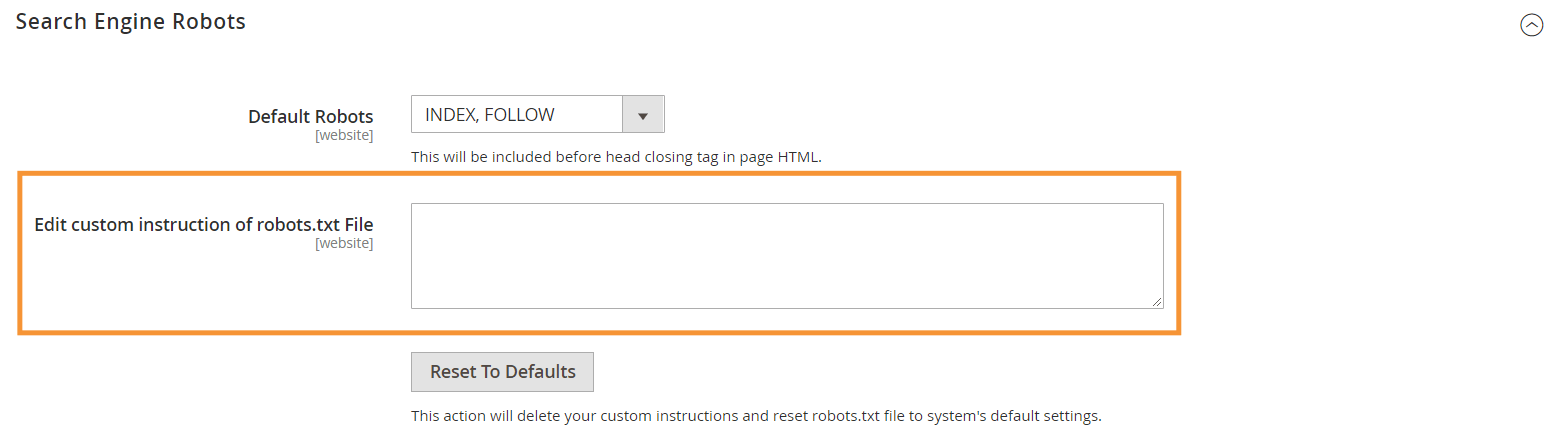

Step 3: In the Search Engine Robots section, edit custom instructions

Recommended robots.txt instructions

Here are our recommended instructions, which should fit the general needs of most stores. Of course, every store is different and you might need to tweak or add a few more rules for the best results.

User-agent: *
# Default instructions:
Disallow: /lib/
Disallow: /*.php$
Disallow: /pkginfo/
Disallow: /report/
Disallow: /var/
Disallow: /catalog/
Disallow: /customer/
Disallow: /sendfriend/
Disallow: /review/
Disallow: /*SID=
# Disallow common Magento files in the root directory:
Disallow: /cron.php
Disallow: /cron.sh
Disallow: /error_log
Disallow: /install.php
Disallow: /LICENSE.html
Disallow: /LICENSE.txt
Disallow: /LICENSE_AFL.txt
Disallow: /STATUS.txt
# Disallow User Account & Checkout Pages:
Disallow: /checkout/
Disallow: /onestepcheckout/
Disallow: /customer/
Disallow: /customer/account/
Disallow: /customer/account/login/
# Disallow Catalog Search Pages:
Disallow: /catalogsearch/
Disallow: /catalog/product_compare/
Disallow: /catalog/category/view/
Disallow: /catalog/product/view/
# Disallow URL Filter Searches
Disallow: /*?dir*
Disallow: /*?dir=desc
Disallow: /*?dir=asc
Disallow: /*?limit=all
Disallow: /*?mode*
# Disallow CMS Directories:
Disallow: /app/
Disallow: /bin/
Disallow: /dev/
Disallow: /lib/
Disallow: /phpserver/
Disallow: /pub/
# Disallow Duplicate Content:
Disallow: /tag/
Disallow: /review/
Disallow: /*?*product_list_mode=
Disallow: /*?*product_list_order=
Disallow: /*?*product_list_limit=
Disallow: /*?*product_list_dir=
# Server Settings
# Disallow general technical directories and files on a server
Disallow: /cgi-bin/
Disallow: /cleanup.php
Disallow: /apc.php
Disallow: /memcache.php
Disallow: /phpinfo.php
# Disallow version control folders and others
Disallow: /*.git
Disallow: /*.CVS
Disallow: /*.Zip$
Disallow: /*.Svn$
Disallow: /*.Idea$
Disallow: /*.Sql$
Disallow: /*.Tgz$
Sitemap: https://www.example.com/sitemap.xml
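When you combine the default rules with custom ones, the same path can easily end up listed twice (the list above repeats /lib/, /customer/, and /review/, for instance). Repeats are harmless to crawlers but make the file harder to maintain. The sketch below flags them; it assumes a single User-agent group, as in the list above, and the function name find_duplicate_rules and the shortened sample are illustrative only.

```python
def find_duplicate_rules(robots_txt: str) -> list:
    """Return (field, path) pairs that appear more than once.

    Assumes a single User-agent group, so every Allow/Disallow line
    belongs to the same set of rules. Paths are compared case-sensitively.
    """
    seen = set()
    duplicates = []
    for raw_line in robots_txt.splitlines():
        # Drop comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        rule = (field.lower(), value)
        if rule[0] not in ("allow", "disallow"):
            continue
        if rule in seen and rule not in duplicates:
            duplicates.append(rule)
        seen.add(rule)
    return duplicates


# A shortened sample in the same shape as the recommended instructions.
SAMPLE = """\
User-agent: *
Disallow: /lib/
Disallow: /customer/
Disallow: /review/
# Disallow User Account & Checkout Pages:
Disallow: /checkout/
Disallow: /customer/
# Disallow CMS Directories:
Disallow: /lib/
# Disallow Duplicate Content:
Disallow: /review/
"""

for field, path in find_duplicate_rules(SAMPLE):
    print(f"duplicate rule: {field}: {path}")
```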

Default Magento 2 is limited in one respect: you cannot tailor robots.txt instructions to each individual page. Sometimes it’s crucial to include a robots meta tag on a specific page, since you might not want Google to index some irrelevant URLs.

For that, you’ll need an extension: this all-in-one Magento 2 SEO extension provides advanced robots meta tag features. Check it out now!

Conclusion

Creating a robots.txt file is only one of the many steps in the Magento SEO checklist, and properly optimizing a Magento store for search engines isn’t an easy task for most store owners. If you’d rather not deal with this yourself, we can take care of everything for you. Here at SimiCart, we provide SEO and speed optimization services which guarantee the best results for your store.
